Submitted by , posted on 24 November 2004

Image Description, by

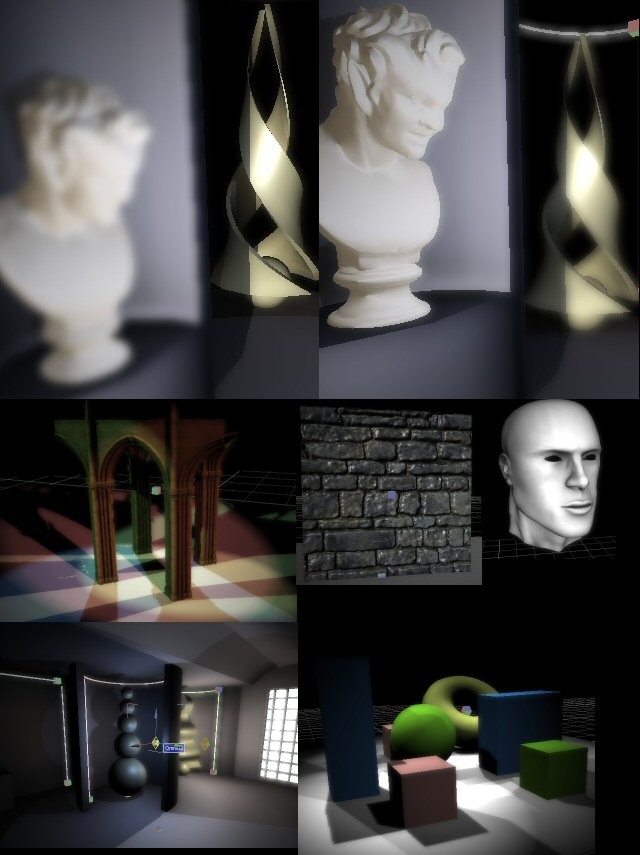

This is a series of screenshots of the Deferred Shading Renderer I'm working on.

For each row:

Two renderings from two nearby viewpoints, each with a different depth of field.

A scene lit by four spotlights with shadows, a parallax-mapped wall, and a face rendered with ambient occlusion.

An indoor scene, where the whole scene has an ambient occlusion map.

Another multi-light scene, rendered with soft shadows.

About the renderer:

Currently it is Shader Model 3.0 compliant only, but there's not much work left to make it run on ATI chips. :)

Four render targets are used, each 32 bits deep:

Albedo: stores the albedo computed by the graphical object's shader.

Z: stores the 32-bit depth of the pixel. It's a shame we can't use the Z-buffer for that... well.

Normal: stores the X and Y components of the pixel's normal; Z is computed on the fly.

Material settings: currently 8-bit components for specular intensity, specular power and ambient occlusion factor; the last one is free.
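The lighting pass has to decode this layout. Below is a minimal sketch of that decoding. It is not the renderer's actual HLSL, and it rests on these assumptions:

- Normals are stored in view space and face the camera (so Z is never negative).
- The Z target holds linear view-space depth.
- The material target behaves like an RGBA8 word with specular intensity in the low byte.

All function names here are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def decode_normal(nx: float, ny: float) -> Vec3:
    """Rebuild a unit normal from the X and Y stored in the Normal target.

    Assumes view-space normals facing the camera (z >= 0), so Z's sign is implied.
    """
    nz = math.sqrt(max(0.0, 1.0 - nx * nx - ny * ny))  # clamp guards against precision loss
    return (nx, ny, nz)


def pack_material(spec_intensity: float, spec_power: float, ao: float, spare: float = 0.0) -> int:
    """Quantize four [0, 1] values to 8 bits each and pack them into one 32-bit word."""
    def to_byte(v: float) -> int:
        return int(round(min(max(v, 0.0), 1.0) * 255))
    return (to_byte(spec_intensity)
            | (to_byte(spec_power) << 8)
            | (to_byte(ao) << 16)
            | (to_byte(spare) << 24))


def unpack_material(word: int) -> Tuple[float, float, float, float]:
    """Inverse of pack_material: (spec_intensity, spec_power, ao, spare) in [0, 1]."""
    return tuple(((word >> shift) & 0xFF) / 255.0 for shift in (0, 8, 16, 24))


def view_position(depth: float, u: float, v: float, tan_half_fov_y: float, aspect: float) -> Vec3:
    """Reconstruct a view-space position from linear depth and the pixel's UV in [0, 1].

    Assumes a symmetric perspective projection looking down +z, with v = 0 at the top.
    """
    x_ndc = u * 2.0 - 1.0
    y_ndc = 1.0 - v * 2.0
    return (x_ndc * tan_half_fov_y * aspect * depth,
            y_ndc * tan_half_fov_y * depth,
            depth)
```

Dropping Z from the normal only works because of the camera-facing assumption. If normals can point away from the viewer, the sign needs to be stored somewhere, for example in the free material byte.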

Some random features: gamma correction, optimized light rendering using projected bounding volumes, HDR (still in progress), soft or hard shadow mapping on point/spot lights (directional lights still in progress, damn PSM...), normal mapping/parallax mapping, ambient occlusion mapping, standard Lambert lighting (later I'll write a new set of custom lights that are more suitable and faster), depth of field, projected textures.
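The post does not say exactly how the projected bounding volumes are used. One common variant bounds a point light's sphere of influence with a box and projects its 8 corners to the screen. The resulting pixel rectangle (for example a scissor rect) then limits where the lighting shader runs.

This is a minimal sketch of that variant, not necessarily what this renderer does. It assumes:

- a symmetric perspective camera looking down +z in view space;
- `light_scissor` and its parameters are hypothetical names.

```python
import math
from itertools import product
from typing import Optional, Tuple

Rect = Tuple[int, int, int, int]


def light_scissor(center: Tuple[float, float, float], radius: float,
                  tan_half_fov_y: float, aspect: float,
                  width: int, height: int, near: float = 0.1) -> Optional[Rect]:
    """Conservative pixel rectangle (x0, y0, x1, y1) covering a point light's range.

    `center` is the light position in view space (+z forward). The light's sphere
    is bounded by an axis-aligned box whose 8 corners are projected to the screen.
    Returns None when the light cannot touch any pixel.
    """
    cx, cy, cz = center
    if cz + radius <= near:
        return None  # the whole volume is behind the near plane
    xs, ys = [], []
    for dx, dy, dz in product((-radius, radius), repeat=3):
        x, y, z = cx + dx, cy + dy, cz + dz
        if z <= near:
            # The box crosses the near plane, so the projection is unreliable:
            # fall back to shading the whole screen for this light.
            return (0, 0, width, height)
        x_ndc = x / (z * tan_half_fov_y * aspect)
        y_ndc = y / (z * tan_half_fov_y)
        xs.append((x_ndc * 0.5 + 0.5) * width)
        ys.append((0.5 - y_ndc * 0.5) * height)
    x0, x1 = max(0, math.floor(min(xs))), min(width, math.ceil(max(xs)))
    y0, y1 = max(0, math.floor(min(ys))), min(height, math.ceil(max(ys)))
    if x0 >= x1 or y0 >= y1:
        return None  # entirely off-screen
    return (x0, y0, x1, y1)
```

Projecting all eight corners is conservative because the box is convex and fully in front of the camera. A small, distant light therefore only shades a small rectangle of pixels instead of the full screen.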

About the deferred shading:

Many people think the good things about deferred shading are early pixel culling and doing the lighting in screen space. Of course, these are advantages over the standard approach, where you first do a pass to fill the Z-buffer and then, ideally, just one more pass to light everything.

My point of view is slightly different. I do like these benefits, but what I enjoy most about deferred shading is the simplicity it brings to many aspects of real-time rendering.

You have three separate stages of rendering: filling the MRTs (multiple render targets), lighting, and post-processing/tone mapping.

This is so clear, and so... right (similar to broadcast renderers). You can write complex shaders for the albedo computation, use different lighting models, and have fun with post-processing. Each stage has its own set of HLSL shaders, and the design is highly pluggable and scalable (a priority for the technology I'm working on). The whole architecture can be strong and stable.
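This is a tiny CPU model of the three-stage split described above. It is only a sketch under stated assumptions, not the renderer's HLSL:

- Stage 1 is represented by a hand-filled G-buffer.
- Stage 2 loops over lights and reads only the G-buffer, calling a swappable lighting function.
- Stage 3 applies Reinhard tone mapping and gamma correction.

Reinhard is a stand-in, since the post does not name its tone-mapping operator. The distance falloff and all names (`GTexel`, `PointLight`, `lighting_stage`, `tone_map_stage`) are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GTexel:
    """One pixel of the G-buffer written by stage 1 (MRT creation)."""
    albedo: Vec3
    normal: Vec3      # unit length
    position: Vec3    # view space, e.g. rebuilt from the Z target


@dataclass
class PointLight:
    position: Vec3
    color: Vec3
    radius: float


def lambert(texel: GTexel, light: PointLight) -> Vec3:
    """Lambert diffuse with a hypothetical linear falloff to zero at light.radius."""
    to_light = [lp - p for lp, p in zip(light.position, texel.position)]
    dist = math.sqrt(sum(d * d for d in to_light))
    if dist == 0.0 or dist >= light.radius:
        return (0.0, 0.0, 0.0)
    n_dot_l = max(0.0, sum(n * d for n, d in zip(texel.normal, to_light)) / dist)
    k = n_dot_l * (1.0 - dist / light.radius)
    return tuple(a * c * k for a, c in zip(texel.albedo, light.color))


LightModel = Callable[[GTexel, PointLight], Vec3]


def lighting_stage(gbuffer: List[GTexel], lights: List[PointLight],
                   light_model: LightModel = lambert) -> List[Vec3]:
    """Stage 2: accumulate every light into an HDR buffer, reading only the G-buffer."""
    hdr = []
    for texel in gbuffer:
        acc = [0.0, 0.0, 0.0]
        for light in lights:
            for i, c in enumerate(light_model(texel, light)):
                acc[i] += c
        hdr.append(tuple(acc))
    return hdr


def tone_map_stage(hdr: List[Vec3], gamma: float = 2.2) -> List[Vec3]:
    """Stage 3: Reinhard tone mapping, then gamma correction."""
    return [tuple((c / (1.0 + c)) ** (1.0 / gamma) for c in px) for px in hdr]
```

Because stage 2 only sees the G-buffer, a different lighting model can be passed as `light_model` without touching the material shaders of stage 1 or the post-process of stage 3. That is the pluggability described above.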

Rendering speed relies mainly on pixel processing power, and we know this is going to improve a lot. Some effects, like depth of field, come almost for free (done during tone mapping). Look at the timings on my log page to get a better idea.
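Depth of field can be almost free in the tone-mapping pass because the Z target is already available there. A cheap approach reads each pixel's depth and blends between the sharp HDR color and a pre-blurred copy before tone mapping.

This is a minimal sketch of that idea under several assumptions:

- The blur amount ramps linearly away from the focal plane.
- A 1-D box blur stands in for a real downsampled blur.
- Reinhard plus gamma stands in for the actual tone-mapping operator.
- All names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


def box_blur_row(row: List[Vec3], radius: int) -> List[Vec3]:
    """Cheap horizontal box blur standing in for a downsampled, blurred copy of the HDR image."""
    n = len(row)
    out = []
    for i in range(n):
        window = row[max(0, i - radius):min(n, i + radius + 1)]
        out.append(tuple(sum(ch) / len(window) for ch in zip(*window)))
    return out


def blur_amount(depth: float, focus_depth: float, focus_range: float) -> float:
    """0 at the focal plane, ramping linearly to 1 at focus_range away from it."""
    return min(1.0, abs(depth - focus_depth) / focus_range)


def dof_tone_map(sharp: List[Vec3], blurred: List[Vec3], depths: List[float],
                 focus_depth: float, focus_range: float, gamma: float = 2.2) -> List[Vec3]:
    """Blend sharp and blurred HDR colors by depth, then tone map and gamma-correct in the same pass."""
    out = []
    for s, b, z in zip(sharp, blurred, depths):
        t = blur_amount(z, focus_depth, focus_range)
        hdr = [sc + (bc - sc) * t for sc, bc in zip(s, b)]
        out.append(tuple((c / (1.0 + c)) ** (1.0 / gamma) for c in hdr))
    return out
```

The only extra costs over plain tone mapping are one depth read, one blurred-buffer read and a lerp per pixel. This matches the "almost for free" claim, though the blurred buffer itself still has to be produced somewhere.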

There's a lot more to say about the architecture of this technique and its real benefits, but I don't want to make this description too long! (I know, it already is! :) )

Some links:

My log page with more screenshots:

http://www.elixys.com/prog/SM3Renderer.html

Description of the whole development environment:

http://www.elixys.com/elixys/iriontech/apidetails.html
